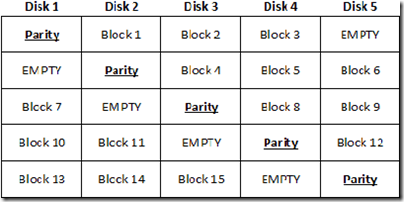

For several years now, RAID 5 has been one of the most popular RAID implementations. Just about every vendor that supplies storage to enterprise data centers offers it, and in many cases it has become, deservedly, a well-trusted tool. RAID 5 stripes parity and blocks of data across all disks in the RAID set. Even though users must devote about 20% of their disk space to the parity stripe, and even though read performance for large blocks may be somewhat diminished and writes may be slower because of the calculations associated with the parity data, few managers have questioned RAID 5’s usefulness.

There are, however, two major drawbacks associated with using RAID 5. First, while it offers good data protection because it stripes parity information across all the disks within the RAID set, it suffers hugely from the fact that should a single disk within the RAID set fail for any reason, the entire array becomes vulnerable: lose a second disk before the first has been repaired and you lose all your data, irretrievably.

This leads directly to the second problem. Because RAID 5 offers no protection whatsoever once the first disk has died, IT managers using that technology have faced a classic Hobson’s choice when they lose a disk in their array. The choices are these. Do they take the system off-line, making the data unavailable to the processes that require it? Do they rebuild the faulty drive while the array is still online, imposing a painful performance hit on the processes that access it? Or do they take a chance, hold their breath, and leave the degraded array in production until things slow down during the third shift, when they can bring the system down and rebuild it without impacting too many users?

This choice, however, is not the problem, but the problem’s symptom.

The parity calculations for RAID 5 are quite sophisticated and time-consuming, and they must be completely redone when a disk is rebuilt. It is not the sophistication of all that math that drags out the process, however, but the fact that when the disk is rebuilt, parity calculations must be made for every block on the disk, whether or not those blocks actually contained data before the problem occurred. In every sense, the disk is rebuilt from scratch.
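To make the rebuild step concrete, here is a minimal sketch that assumes the conventional single-parity scheme, in which the parity block is the byte-wise XOR of the data blocks in a stripe. The block contents and the helper name `xor_blocks` are illustrative assumptions, not taken from any vendor's implementation. Note that an all-zero (unused) stripe goes through exactly the same work as one holding data.

```python
from functools import reduce

def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# Three data blocks in one stripe (hypothetical contents).
data = [b"\x01\x02\x03\x04", b"\x10\x20\x30\x40", b"\xaa\xbb\xcc\xdd"]
parity = xor_blocks(data)

# Simulate losing block 1: XOR the surviving data blocks with the parity block.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)
assert rebuilt == data[1]

# An unused, all-zero stripe is processed the same way during a rebuild.
empty_stripe = [bytes(4)] * 3
assert xor_blocks(empty_stripe) == bytes(4)
```

A block-level rebuild of this kind has no knowledge of which stripes hold live data, which is why every stripe on the replaced disk has to be recomputed.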

An unfortunate and very dirty fact of life about RAID 5 is that if a RAID set contains, say, a billion sectors spread over the array, the demise of even a single sector means the whole array must be rebuilt. This wasn’t much of a problem when disks were a few gigabytes in size. Obviously, though, as disks get bigger, more blocks must be accounted for and more calculations will be required. Unfortunately, with present technology the RAID recovery rate stays roughly constant irrespective of drive size, which means that rebuilds will take longer as drives get larger. Already that problem is becoming acute. With half-terabyte disks becoming increasingly common in the data center, and with the expected general availability of terabyte-sized disks this fall, the dilemma will only get worse.
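The scaling argument is simple arithmetic: at a fixed rebuild rate, rebuild time grows in direct proportion to drive capacity. The sketch below uses a hypothetical sustained rate of 50 MB/s purely for illustration; it is not a measured figure for any controller, and real rebuilds under production load may be considerably slower.

```python
def rebuild_hours(capacity_gb, rebuild_mb_per_s):
    """Hours needed to rewrite every block of a drive at a fixed rate."""
    seconds = capacity_gb * 1000 / rebuild_mb_per_s
    return seconds / 3600

RATE_MB_S = 50  # hypothetical rebuild rate, an assumption for this example

for capacity_gb in (36, 500, 1000):
    hours = rebuild_hours(capacity_gb, RATE_MB_S)
    print(f"{capacity_gb:>5} GB -> {hours:.1f} h")
```

Whatever the actual rate, doubling the capacity doubles the window during which the array runs without protection.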

The solution offered by most vendors is RAID 6.

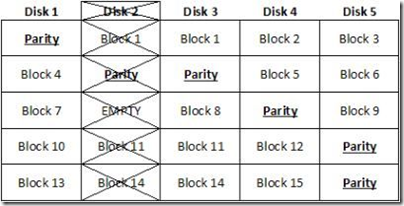

The vendors would have you believe that RAID 6 is like RAID 5 on steroids: it eliminates RAID 5’s major drawback – the inability to survive a second disk failure – by providing a second parity stripe. Using steroids of course comes with its own set of problems.

RAID 6 gives us a second parity stripe. The purpose of doing all of the extra math to support this dual parity is that the second parity stripe operates as a “redundancy” or high availability calculation, ensuring that even if the parity data on the bad disk is lost, the second parity stripe will be there to ensure the integrity of the RAID set. There can be no question that this works. Buyers should, however, question whether or not this added safety is worth the price.

Consider three issues. RAID 6 offers significant added protection, but let’s also understand how it does what it does, and what the consequences are. RAID 6’s parity calculations are entirely separate from the ones done for the RAID 5 stripe, and go on simultaneously with the RAID 5 parity calculations. This calculation does not protect the original parity stripe, but rather, creates a new one. It does nothing to protect against first disk failure.

Because calculations for this RAID 6 parity stripe are more complicated than are those for RAID 5, the workload for the processor on the RAID controller is actually somewhat more than double. How much of a problem that turns out to be will depend on the site and performance demands of the application being supported. In some cases the performance hit will be something sites will live with, however grudgingly. In other cases, the tolerance for slower write operations will be a lot lower. Buyers must balance the increased protection against the penalty of decreased performance.
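The article does not say which dual-parity scheme it has in mind. As an illustration only, the sketch below assumes one widely used approach: the first stripe (P) is plain XOR, and the second (Q) is a Reed-Solomon style sum over the finite field GF(2^8), here with reducing polynomial 0x11d and generator 2. All function names are hypothetical. It shows why Q costs more to compute than P: every byte needs a field multiplication, not just an XOR.

```python
def gf_mul(a, b):
    """Multiply two bytes in GF(2^8), reducing by the polynomial 0x11d."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11d
        b >>= 1
    return result

def gf_pow(a, n):
    """Raise a to the n-th power in GF(2^8) by repeated multiplication."""
    result = 1
    for _ in range(n):
        result = gf_mul(result, a)
    return result

def p_parity(blocks):
    """First stripe: plain XOR of the data blocks."""
    out = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            out[i] ^= byte
    return bytes(out)

def q_parity(blocks):
    """Second stripe: XOR of each block scaled by 2**index in GF(2^8)."""
    out = bytearray(len(blocks[0]))
    for index, block in enumerate(blocks):
        coeff = gf_pow(2, index)
        for i, byte in enumerate(block):
            out[i] ^= gf_mul(coeff, byte)
    return bytes(out)

def recover_from_q(blocks, lost, q):
    """Rebuild data block `lost` using only Q, as if P were lost as well."""
    partial = bytearray(q)
    for index, block in enumerate(blocks):
        if index == lost:
            continue  # the lost block's contents are never read
        coeff = gf_pow(2, index)
        for i, byte in enumerate(block):
            partial[i] ^= gf_mul(coeff, byte)
    inverse = gf_pow(2, 255 - lost)  # 2**255 == 1, so this undoes 2**lost
    return bytes(gf_mul(inverse, byte) for byte in partial)

data = [b"\x01\x02\x03", b"\x10\x20\x30", b"\xaa\xbb\xcc"]
p, q = p_parity(data), q_parity(data)
assert recover_from_q(data, lost=1, q=q) == data[1]
```

Production controllers typically use lookup tables or dedicated hardware for the field multiplications; the bit-by-bit loop here is chosen for readability, not speed.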

Issue two has to do with the nature of RAID 5 and RAID 6 failures.

The most frequent cause of a RAID 5 failure is that a second disk in the RAID set fails during reconstruction of a failed drive. Most typically this will be due to a media error, a device error, or an operator error during the reconstruction; should that happen, the entire reconstruction fails. With RAID 6, after the first device fails the array is effectively running as a RAID 5, deferring but not removing the problems associated with RAID 5. When it is time to do the rebuild, all the RAID 5 choices and rebuild penalties remain. While RAID 6 adds protection, it does nothing to alleviate the performance penalty imposed during those rebuilds.

Need a more concrete reason not to accept RAID 6 at face value as the panacea your vendor says it is? Try this.

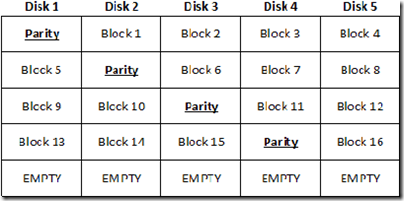

When writing a second parity stripe, we of course lose about the same amount of disk space as we did when writing the first (assuming the same number of disks are in each RAID group). This means that when implementing RAID 6, we are voluntarily reducing disk storage space to about 60% of purchased capacity (as opposed to 80% with RAID 5); these figures correspond to a five-disk group, and the overhead shrinks as the group gets wider. The result: in order to meet anticipated data growth, in a RAID 6 environment we must always buy added hardware.
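The 80% and 60% figures follow from giving up one or two disks' worth of capacity out of a five-disk group. A short sketch of that arithmetic for a few group sizes (the group sizes other than five are illustrative assumptions):

```python
def usable_fraction(disks, parity_disks):
    """Fraction of purchased capacity left for data."""
    return (disks - parity_disks) / disks

for disks in (5, 8, 12):
    raid5 = usable_fraction(disks, 1)
    raid6 = usable_fraction(disks, 2)
    print(f"{disks:>2} disks: RAID 5 {raid5:.0%}, RAID 6 {raid6:.0%}")
```

Wider groups reduce the capacity penalty, though they also put more drives at risk during any one rebuild.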

This is the point at which many readers will sit back in their chairs and say to themselves, “So what? Disks are cheap!” And so they are, which naturally is one of the reasons storage administrators like them so much. But what if my reader is not a storage administrator? What if the reader is a data center manager, or an MIS director, or a CIO, or a CFO? In other words, what if my reader is as interested in operational expenditures as in the CAPEX?

In this case, the story becomes significantly different. Nobody knows exactly what the relationship between CAPEX and OPEX is in IT, but a rule of thumb seems to be that when it comes to storage hardware the OPEX will be 4-8 times the cost of the equipment itself. As a result, everybody has an eye on the OPEX. And these days we all know that a significant part of operational expenditures derives from the line items associated with data center power and cooling.

Because of the increasing expense of electricity, many sites are on notice that they will have to make do with what they already have when it comes to power consumption. Want to add some new hardware? Fine, but make sure it is more efficient than whatever it replaces.

When it comes to storage, I’m quite sure that we will see a new metric take hold. In addition to existing metrics for throughput and dollars-per-gigabyte, watts-per-gigabyte is something on which buyers will place increased emphasis. That figure, and not the cost of the disk, will be a recurring expense that managers will have to live with for the life of whatever hardware they buy.
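As a rough illustration of the watts-per-gigabyte idea, the sketch below divides the power drawn by every drive in a group by the capacity actually available for data. The drive size (500 GB) and per-drive power draw (10 W) are hypothetical placeholders, and the function name is made up for this example; substitute your own measured figures.

```python
def watts_per_usable_gb(disks, parity_disks, drive_gb, drive_watts):
    """Power drawn by the whole group divided by its usable capacity."""
    usable_gb = (disks - parity_disks) * drive_gb
    return disks * drive_watts / usable_gb

# Hypothetical five-drive group: 500 GB and 10 W per drive (assumed values).
raid5 = watts_per_usable_gb(5, 1, 500, 10.0)
raid6 = watts_per_usable_gb(5, 2, 500, 10.0)

print(f"RAID 5: {raid5 * 1000:.1f} mW per usable GB")
print(f"RAID 6: {raid6 * 1000:.1f} mW per usable GB")
print(f"RAID 6 overhead: {raid6 / raid5 - 1:.0%} more power per usable GB")
```

The ratio between the two depends only on the group size, not on the assumed wattage, so the comparison holds whatever drives are used.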

If you’re thinking of adding RAID 6 to your data protection mix, consider the downstream costs as well as the product costs.

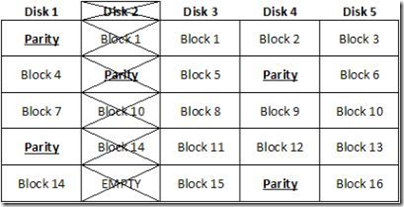

Does RAID 6 cure some problems? Sure, but it also creates others, and there are alternatives worth considering. One possibility is a multilevel RAID combining RAID 1 (mirroring) and RAID 0 (striping without parity), usually called either RAID 10 or RAID 1+0. Another is the “non-traditional” RAID approach offered by vendors who build devices that protect data rather than disks. In such cases, RAID 5 and 6 would have no need for all those recalculations required for the unused parts of the disk during a rebuild.
